DB2 and Oracle Database: An Autonomic Computing Comparison

Wikipedia defines autonomic computing as “the self-managing characteristics of distributed computing resources, adapting to unpredictable changes whilst hiding intrinsic complexity to operators and users”. Both IBM and Oracle have added autonomic computing features to their database software products. On 29 September 2011, IBM will host a Chat with the Labs webcast where the hosts will compare the autonomic computing features of IBM DB2 and Oracle Database in the following areas:

- Memory Management

- Storage Management

- Utility throttling

- Automatic Configuration

- Automatic Maintenance

You can sign up for the webcast at: DB2 and Oracle Database: An Autonomic Computing Comparison.

Initial Thoughts on New Oracle Database Appliance

I just watched the Oracle Webcast announcing its new database appliance. Here are my initial reactions.

I expected Oracle to announce a mini-Exadata, as had been widely rumored. However, as far as I can see, this is not a mini-Exadata. I don’t have details yet, but it does not appear to contain the Exadata storage-layer software. This is simply an Oracle Database appliance, or an Oracle RAC appliance. Nothing more. In other words, it is the fusion of Oracle software, Oracle hardware, and some support/services.

Because this announcement is really about making Oracle Database easier to deploy, I’m not sure it has much applicability for organizations with an existing Oracle Database set-up, unless they are planning a hardware migration. But judging from how this was presented and positioned, Oracle are probably focusing this product on channel sales, and making this as partner-friendly as possible.

I like that Oracle have followed IBM’s lead and added pay-as-you-grow licensing/pricing. In this appliance, you activate and license CPU cores as needed. Of course, IBM pureScale Application System already offers this. However, IBM still has an advantage here: it can seamlessly add or remove database processing capacity by combining its transparent scaling with per-day software licensing. In other words, you can purchase DB2 pureScale database software licenses only for the days when you need the extra capacity. This is a great strategy for avoiding over-provisioning database software licenses just to handle significant short-term spikes in demand (like retailers around the holidays, for instance). For more information, check out the “flexible licensing” section of the following blog post: IBM Previews New Integrated System for Transactional Workloads.
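To make the over-provisioning argument concrete, here is a minimal sketch comparing two approaches: licensing enough capacity for the peak every day of the year, versus licensing only what each day needs. The demand profile is entirely hypothetical, "core-days" is a simplified unit used only for this illustration, and no actual IBM or Oracle license metric or price is modelled.

```python
# Hypothetical illustration only: the demand numbers are invented and
# "core-days" is a simplified unit, not a real IBM or Oracle license metric.

def core_days_peak_provisioned(daily_cores_needed):
    """License enough cores for the peak day, on every day of the period."""
    peak = max(daily_cores_needed)
    return peak * len(daily_cores_needed)

def core_days_pay_per_day(daily_cores_needed):
    """License only the cores actually needed on each day."""
    return sum(daily_cores_needed)

# Hypothetical year: 16 cores on most days, 48 cores for a 30-day holiday spike.
demand = [16] * 335 + [48] * 30

peak = core_days_peak_provisioned(demand)
daily = core_days_pay_per_day(demand)
print(peak, daily, f"{1 - daily / peak:.0%} fewer core-days")
```

With this made-up profile, the sketch prints `17520 6800 61% fewer core-days`. The gap widens as the spike gets sharper or shorter relative to the baseline.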

What is not yet clear is the growth path for these Oracle Database Appliances. At least from my initial look, there does not seem to be a seamless upgrade path to Exadata. It appears that growing beyond this appliance will not be an insignificant undertaking. Perhaps someone from Oracle can comment on that.

So, in summary, this announcement appears to simply be a packaging exercise by Oracle, where they have created a relatively straightforward database appliance. Your thoughts/reactions?

Why Informix Rules for Time Series Data Management

Informix has had TimeSeries data management capabilities for more than a decade. However, those capabilities are garnering more attention today than ever before. As our world is becoming more instrumented, there is an increasing need to manage data from sensors. And this data from sensors is often being generated at intervals, creating the need for time series analysis.

As I’ve just said, Informix has long had TimeSeries capabilities. However, it wasn’t until recent customer evaluations became public knowledge that the incredible performance of Informix for time series applications became apparent. And now, as a result, Informix is being touted for Smarter Planet-type solutions, including Smart Grid systems.

I mentioned recent customer evaluations. One of those was at ONCOR, a provider of electricity to millions of people in Texas. ONCOR compared Oracle Database and Informix for its temporal data management and analysis needs. They discovered that, for their usage, Informix was 20x faster than Oracle Database at loading data from Smart Meters, and up to 30x faster for their time series queries. ONCOR also discovered that, before applying data compression, Informix used approximately 70% less storage than Oracle Database.

If you are using Oracle Database for a time series application, you should certainly consider Informix. You may significantly improve performance, while at the same time lowering your server, software, and storage costs. The secret sauce is in the way that Informix stores and accesses this time series data. The Informix approach is unique among relational database systems. It stores information that indicates the data source only one time, and then stores the time-stamped values for that source in an infinitely wide column beside it. This approach results in both the storage savings and the huge performance gains.
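To make the storage difference concrete, here is a minimal Python sketch comparing a row-per-reading layout with a layout that stores the source identifier only once. The byte sizes are assumptions chosen for illustration, the meter name is hypothetical, and this is not how Informix TimeSeries is implemented internally. It does not model row headers, indexes, or compression, so it will not reproduce ONCOR's figures.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta

# Assumed on-disk sizes in bytes (illustrative only, not Informix internals).
SOURCE_ID_BYTES = 16
TIMESTAMP_BYTES = 8
VALUE_BYTES = 8

@dataclass
class Reading:
    """Row-per-reading layout: the source id is repeated on every row."""
    meter_id: str
    ts: datetime
    kwh: float

@dataclass
class MeterSeries:
    """Series layout: the source id is stored once, readings sit beside it."""
    meter_id: str
    points: list = field(default_factory=list)  # list of (timestamp, value)

    def append(self, ts, kwh):
        self.points.append((ts, kwh))

def row_layout_bytes(rows):
    return len(rows) * (SOURCE_ID_BYTES + TIMESTAMP_BYTES + VALUE_BYTES)

def series_layout_bytes(series):
    return SOURCE_ID_BYTES + len(series.points) * (TIMESTAMP_BYTES + VALUE_BYTES)

# One day of hypothetical 15-minute smart-meter readings for a single meter.
start = datetime(2011, 9, 1)
rows, series = [], MeterSeries("meter-0001")
for i in range(96):
    ts = start + timedelta(minutes=15 * i)
    rows.append(Reading("meter-0001", ts, 0.25))
    series.append(ts, 0.25)

print(row_layout_bytes(rows), series_layout_bytes(series))
```

With these assumed sizes, the sketch prints `3072 1552`: the saving comes entirely from not repeating the 16-byte source identifier on every reading. Keeping a source's readings together also means a query for one meter reads one contiguous structure rather than many scattered rows.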

If you want to read more about Informix and the management of time series data, check out these recent blog posts: This Smart Meter Stuff is for Real and Using Informix to Capture TimeSeries Data that Overwhelms Commodity Databases.

Gartner: IBM DB2’s Maturing Oracle Compatibility Presents Opportunities, with some Limitations

When IBM DB2 first added syntax that is compatible with Oracle Database data types, SQL, PL/SQL, scripting, and more, Gartner wrote their “first take” on the technology in IBM DB2 9.7 Shakes Up the DBMS Market With Oracle Compatibility. More than two years later, Gartner are now following up with a research report on the features. You can read the research report at: IBM DB2’s Maturing Oracle Compatibility Presents Opportunities, with some Limitations. This is not a commissioned report. It is independent research from Gartner.

More Organizations Move up to the Mainframe and DB2 for z/OS

Any of you who are familiar with DB2 on the mainframe (officially known as DB2 for z/OS) know how efficient it is. The mainframe is not for every organization. However, for those organizations for whom the mainframe is a good fit, the tremendous levels of efficiency, reliability, availability, and security directly translate into significant cost savings.

Database software on the mainframe may be relatively boring when compared with the data management flavor of the day (whether it is Hadoop or any of the other technologies associated with Big Data). But when it comes to storing mission-critical transactions, nothing beats the ruthless efficiency of the mainframe. And that boring, ruthless efficiency has been winning over organizations.

Earlier this year, eWeek reported how the IBM Mainframe Replaces HP, Oracle Systems for Payment Solutions. In this article, eWeek describe how Payment Solution Providers (PSP) from Canada chose DB2 for z/OS over Oracle Database on HP servers. A couple of items in this article really catch the eye. One is that the operational efficiencies of the mainframe are expected to lower IT costs by up to 35 percent for PSP. The other is that PSP’s system can now process up to 5,000 transactions per second.

Another organization that moved in the same direction is BC Card, Korea’s largest credit card company. The Register ran a story about how a Korean bank dumps Unix boxes for mainframes. BC Card is a coalition of 11 South Korean banks that handles credit card transactions for 2.62 million merchants and 40 million credit card holders in the country. They dumped their HP and Oracle Sun servers in favor of an IBM mainframe. In an accompanying IBM press release, it was revealed that IBM scored highest in every benchmark test category, from performance to security to flexibility. Another significant factor in the move to the mainframe was the combination of utility pricing, which lets customers activate and deactivate mainframe engines on demand, and software pricing that scales up and down with capacity.

Despite continual predictions of its demise, the mainframe has reportedly experienced one of its best years ever, with an increase in usage (well, technically MIPS) of 86% from the same time in 2010. Much of this growth is coming from new customers to the mainframe. In fact, since the System z196 mainframe started shipping in the third quarter of 2010, IBM has added 68 new mainframe customers, with more than two-thirds of them consolidating from distributed systems.

It may not be as exciting as the newest technology on the block, but it is difficult to beat the reliability and efficiency of the mainframe, especially when you are faced with the realities of managing a relatively large environment and all of the costs associated with doing so. And don’t forget, the mainframe can provide you with a hierarchical store, a relational store, or a native XML store. When you combine the security advantages and 24×7 availability with cost efficiency, it makes for an interesting proposition.

Call for Presentations – 2012 DB2 Tech Conference in Denver, Colorado

The deadline for submitting proposals for presentations at next year’s DB2 Tech Conference in Denver, Colorado is fast approaching. Make sure to get your proposals in by 14 October 2011. You can submit your proposals on the International DB2 User Group Web site at Call For Presentations. If you look at the Web site, you will see the list of potential topics, as well as guidelines for the presentations. Essentially, the organizers are looking for presentations on almost every aspect of working with DB2. If you have experiences to share, presenting at the conference is a great way to get a complimentary pass to the conference.

Oracle Press Release Gets the Facts Wrong

Do Oracle check their facts before they issue a press release? Because today there is yet another instance of a blatant mistruth issued by Oracle. This time in an official Oracle press release about an SAP benchmark result. Here is the offending quote:

“Oracle’s superior scalable cluster architecture has full high availability unlike IBM’s that does not scale beyond a single server“

The quote is attributed to Juan Loaiza, Senior Vice President, Systems Technology at Oracle Corporation. Now, it is possible that Oracle is not intentionally trying to mislead the public, and that this is simply a case of poor fact-checking. If that is the case, then they should have checked yesterday’s SAP benchmark results, in which an IBM DB2 cluster took the top spot in another SAP benchmark.

For the record, IBM DB2 has outstanding scale-out capabilities. IBM DB2 provides both shared-nothing partitioning scale-out capabilities as well as shared-disk clustering scale-out capabilities. Many would argue that IBM DB2 has significantly superior scale-out capabilities when compared with Oracle Database. Especially when it comes to scale-out efficiency.
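As a rough illustration of the shared-nothing side, here is a minimal Python sketch of hash-based row distribution, where each row is owned by exactly one partition. The four-partition cluster and the account keys are hypothetical, and `zlib.crc32` is only a stand-in: DB2's actual hashing function and partition map are not reproduced here.

```python
import zlib

# Generic shared-nothing sketch: each row is routed to exactly one database
# partition by hashing its distribution key. crc32 is a stand-in hash, not
# DB2's real hashing function; the cluster size is hypothetical.
NUM_PARTITIONS = 4

def partition_for(key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Deterministically map a distribution key to a partition number."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions

partitions = {p: [] for p in range(NUM_PARTITIONS)}
for account_id in (f"ACCT{n:05d}" for n in range(1000)):
    partitions[partition_for(account_id)].append(account_id)

# Each partition owns a disjoint subset of rows, so each node can scan or
# aggregate its share in parallel without sharing disks with the others.
for p, owned in partitions.items():
    print(p, len(owned))
```

In a shared-disk cluster such as pureScale, by contrast, every member can access all of the data, so rows are not routed to a single owning node by key.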

Note: When this first came to light, I was a little upset. After taking a little time to calm down, I updated some statements in this blog post to tone them down. Thankfully, I probably did this before anyone got a chance to read them 🙂

Industry Benchmark Result for DB2 pureScale: SAP Transaction Banking (TRBK) Benchmark

A couple of years ago, IBM introduced the pureScale feature, which provides application cluster transparency (allowing you to create shared-disk database clusters). At the time, IBM had taken their industry-leading clustering architecture from the mainframe, and brought it to Unix environments. IBM subsequently also brought it to Linux environments.

Today, IBM announced its first public industry benchmark result for this cluster technology. IBM achieved a record result for the SAP Transaction Banking (TRBK) Benchmark, processing more than 56 million posting transactions per hour and more than 22 million balanced accounts per hour. The results were achieved using IBM DB2® 9.7 on SUSE Linux® Enterprise Server. The cluster contained five IBM System x3690 X5 database servers, and used the IBM System Storage® DS8800 disk system. The servers were configured to take over workload in case of a single system failure, thereby supporting high application availability. For more details, see the official certification from SAP.
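Hourly rates can be hard to picture, so here is the simple conversion to per-second rates. The inputs are the rounded "more than" figures quoted above, so the results are lower bounds derived by simple division, not numbers taken from the SAP certification.

```python
# Convert the hourly TRBK rates quoted in the text into per-second rates.
# Inputs are the rounded "more than" figures, so outputs are lower bounds.
SECONDS_PER_HOUR = 3600

postings_per_hour = 56_000_000
accounts_per_hour = 22_000_000

postings_per_second = postings_per_hour / SECONDS_PER_HOUR
accounts_per_second = accounts_per_hour / SECONDS_PER_HOUR

print(f"{postings_per_second:,.0f} postings/s")  # 15,556 postings/s
print(f"{accounts_per_second:,.0f} accounts/s")  # 6,111 accounts/s
```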

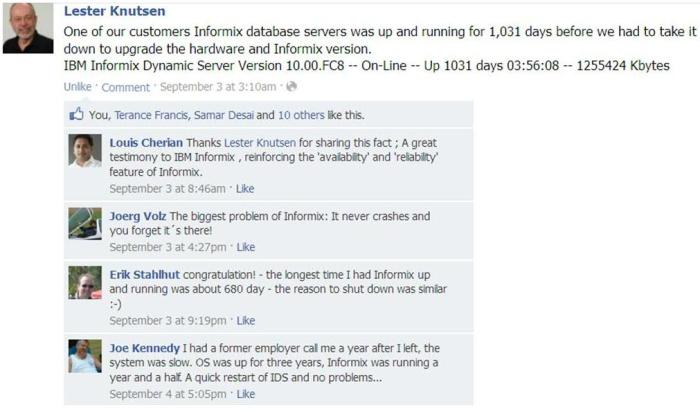

Informix Availability: War Stories

I remember hearing the phrase “set it, and forget it” in relation to Informix a few years ago. That catch-phrase has stuck with me ever since. Initially, I was intrigued by the phrase simply because I would not have associated that level of reliability with a database unless it was running on a mainframe. But when I talked with Informix users, they passionately agreed with this catch-phrase.

Informix has had an interesting history. At one time, it was going toe-to-toe with Oracle Database for database supremacy in distributed environments like Unix. Some would argue that, at the time, Informix was in a good position to win that war. Then the Informix train derailed. But curiously, it wasn’t technology reasons that took Informix off track. It was a series of corporate governance catastrophes combined with a series of poorly chosen and poorly executed acquisitions that stalled the Informix momentum. The Informix technology was never called into question. However, its corporate governance certainly was.

Informix eventually found a home at IBM. And IBM, with its technology-friendly approach to product development, is a good home for a product like Informix. (IBM’s focus on corporate-level advertising, rather than product-level advertising, has left some Informix advocates unhappy with the level of awareness of Informix, but that matter has been dealt with at length elsewhere in the blogosphere.) What cannot be questioned is IBM’s continued investment in Informix product features. Since the last major release of Oracle Database in 2007, IBM Informix has had two releases (code-named Cheetah2 and Panther), which added major new features such as Flexible Grid, the Warehouse Accelerator, Storage Provisioning, Selective Row-Level Audit, Trusted Context, and more. IBM has also integrated the Genero feature into the product (for 4GL development). It is a strong testament to Informix that these features have been added during a time when we have seen few new features in Oracle Database.

Through all of its storied history, the Informix technology continues to be alive and well. And users continue to love Informix for its technology, performance, ease-of-use, and reliability. As I’ve said in the past, Informix doesn’t have users, it has fans. And now, the catalyst for this blog post… I recently encountered very tangible evidence of this “set it, and forget it” mantra for Informix in the following Facebook exchange, where users share their availability “war stories”. It makes for fascinating reading for database administrators (DBAs) who work on troublesome systems.

Hadoop Fundamentals Course on BigDataUniversity.com

After spending some time reading about Apache Hadoop, I decided it was time to get my hands dirty. So this weekend, I took the Hadoop Fundamentals 1 self-paced course on BigDataUniversity.com. It is a really nice way to play with Hadoop. You have the choice of downloading the software and installing it on your computer, working with a VMware image, or working in the cloud. I chose the option of working in the cloud. Within a few minutes I had an Amazon AWS account, a RightScale account, and the software installed in the cloud. By the way, although the course is FREE, I did incur some cloud-related usage charges. It amounted to approximately $1 in Amazon charges for the time it took me to complete the course.

The course itself is quite good. It is, as the abstract implies, a high-level overview. It describes the concepts involved in Hadoop environments, describes the Hadoop architecture, and provides an opportunity to follow tutorials for using Pig, Hive, and Jaql. It also provides a tutorial on using Flume. Because of my experience with JavaScript and JSON, I feel most comfortable using Jaql to query data in Hadoop. However, the DBAs among you will probably feel most comfortable with Hive, given its SQL-friendly approach.

If you are curious about Hadoop, I’d recommend this course. I’m eagerly anticipating the availability of the follow-on Hadoop course…

What you need to know about Column-Oriented Database Systems

Column-oriented database systems (Column Stores) have attracted a lot of attention in the past few years. Vendors have quoted impressive performance and storage gains over row-oriented database systems. In some cases, vendors have even claimed as much as 1000x performance improvement.

For many years Sybase IQ led the way for Column Stores. But it has since been joined by a long list of vendors, including Infobright, ParAccel, and Vertica. The claims being made by these vendors are attention-grabbing. But they don’t tell the whole story. Before you run out and get yourself a Column Store, you should be aware of the following:

- Performance issues when queries involve several columns.

Column Stores can be faster than row-oriented stores when the number of columns involved is small (how much faster depends on a number of factors, including the number of columns, the use of indexing, the specifics of the query, and so on). However, you can run into performance issues with Column Stores as the number of columns increases, due to the overhead of re-composing rows from their individual column values. In fact, the performance degradation can be quite significant. If your queries involve more than a few columns (either columns for retrieving data or columns for query predicates), you need to be aware of this potential issue. If you are evaluating a Column Store, make sure to test queries that involve more than a couple of columns.

- Overhead associated with inserting data.

When you create a new data record, you are creating a data row. However, a Column Store does not have a row-orientation. Instead, a Column Store must decompose that data row into the individual column values, and store each of those column values individually. This adds up to a lot more block updates for a Column Store than for a row-oriented store. As you can imagine, this is quite a bit of additional work. You should also keep in mind that the values in Column Stores are typically sorted for fast selection and retrieval, which means even more work for data insert and update operations. So what does all this mean? Essentially, these limitations make it difficult to have real-time or near real-time data analysis with Column Stores (unless the Column Store vendor uses an approach like Vertica’s, which has an “update area” in memory that is essentially a row store cache: real-time inserts are written there first, and then asynchronously written to disk).
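Both limitations above can be seen in a toy model. The following is a minimal Python sketch, assuming a single projection whose columns are all kept in the order of one sort key. `insert_direct` decomposes each row and touches every column, `select` has to stitch rows back together from each requested column, and `insert_buffered`/`flush` loosely mimic the in-memory row buffer idea mentioned for Vertica. It is not Vertica's (or any vendor's) actual implementation, all names are hypothetical, and the `column_writes` counter is only a crude proxy for block updates.

```python
import bisect

class ColumnStore:
    """Toy column store: one Python list per column, all in sort-key order.

    A minimal sketch assuming a single projection sorted on `sort_key`;
    real products store compressed blocks on disk, not Python lists.
    """

    def __init__(self, column_names, sort_key):
        self.columns = {name: [] for name in column_names}
        self.sort_key = sort_key
        self.column_writes = 0  # one write per column touched (crude proxy)
        self.write_buffer = []  # hypothetical row-oriented in-memory buffer

    def insert_direct(self, row):
        """Decompose one row and place each value into its own sorted column."""
        pos = bisect.bisect_right(self.columns[self.sort_key], row[self.sort_key])
        for name, values in self.columns.items():
            values.insert(pos, row[name])  # every column is touched per row
            self.column_writes += 1

    def insert_buffered(self, row):
        """Append the whole row to the buffer; no column is touched yet."""
        self.write_buffer.append(row)

    def flush(self):
        """Merge all buffered rows into the sorted columns in one batch."""
        if not self.write_buffer:
            return
        names = list(self.columns)
        key_idx = names.index(self.sort_key)
        rows = list(zip(*(self.columns[n] for n in names)))
        rows += [tuple(r[n] for n in names) for r in self.write_buffer]
        rows.sort(key=lambda r: r[key_idx])
        for i, name in enumerate(names):
            self.columns[name] = [r[i] for r in rows]
            self.column_writes += 1  # each column rewritten once per batch
        self.write_buffer.clear()

    def select(self, column_names):
        """Re-compose rows by taking one value from each requested column.

        The work grows with the number of columns requested.
        """
        stored = zip(*(self.columns[n] for n in column_names))
        buffered = (tuple(r[n] for n in column_names) for r in self.write_buffer)
        return list(stored) + list(buffered)

cols = ["sale_date", "store", "product", "qty", "price"]
direct = ColumnStore(cols, sort_key="sale_date")
buffered = ColumnStore(cols, sort_key="sale_date")
for d in (20110903, 20110901, 20110902):
    row = {"sale_date": d, "store": "S1", "product": "P9", "qty": 1, "price": 9.99}
    direct.insert_direct(row)
    buffered.insert_buffered(row)
buffered.flush()

print(direct.column_writes)    # 15: 3 rows x 5 columns
print(buffered.column_writes)  # 5: each column rewritten once
print(direct.select(["sale_date", "qty"]))
```

The last line prints `[(20110901, 1), (20110902, 1), (20110903, 1)]`. The buffered path batches the per-column work, but rewriting a whole column is not free either; the sketch only shows where the extra work comes from, not how large it is on a real system.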

Column Stores are great as analytic data marts where queries do not involve many columns. In such situations, you can enjoy performance gains. However, for more involved usage, you may run into issues. For instance, a Column Store is almost certainly not up to supporting thousands of simultaneous users and mixed query workloads, which are common in Enterprise Data Warehouse (EDW) environments. Sometimes people can get blinded by Column Store success for relatively simple data mart environments. You should be aware that these performance gains do not necessarily translate to larger, more complex environments. In fact, they may not even translate to other simple data marts with different schemas, or where your queries involve more than a couple of columns. The bottom line here is that you need to know both the benefits and the limitations of a Column Store, and make the right decision for your particular situation.

IBM DB2 versus Oracle Database for OLTP

This is a presentation I put together a while ago, but for the most part the information still applies, and I thought some of you may find it interesting or relevant.

IBM Smart Analytics System vs. Oracle Exadata for Data Warehouse Environments

Here is a video where Philip Howard, Research Director at Bloor Research, evaluates performance, scalability, administration, and cost considerations for IBM Smart Analytics System and Oracle Exadata [for data warehouse environments]. This video is packed with great practical advice for evaluating these products.

Oracle Exadata vs. IBM pureScale Application System for OLTP Environments

Philip Howard, Research Director at Bloor Research, recently evaluated the performance, scalability, administration, and cost considerations for the leading integrated systems from IBM and Oracle for OnLine Transaction Processing (OLTP) environments. Here is a summary of his conclusions:

And here is a video with his evaluation. It is packed with practical advice regarding storage capacity, processing capacity, and more.

Are IBM DB2 and Oracle Database NoSQL Databases?

The NoSQL movement has garnered a lot of attention recently. It has been built around a number of emerging highly-scalable non-relational data stores. The movement is also giving a new lease of life to smaller non-relational database vendors who have been around for a while.

Last week, I noticed an entire track for XML and XQuery sessions at the recent NoSQLNow Conference in San Jose. If XML databases and XQuery are key constituents of the NoSQL world, does that mean that IBM DB2 and Oracle Database should be included in the NoSQL movement? After all, both IBM DB2 and Oracle Database store XML data and provide XQuery interfaces. Of course, I’m not being serious here. I don’t believe that the bastions of the relational world should be included in the NoSQL community. Are native XML databases, which have been around for a while, really in the spirit of the NoSQL movement? What’s your opinion?

I believe that the boundaries of the NoSQL community are perhaps a bit looser than they should be. Essentially, absolutely everything except relational databases is being grouped under the NoSQL banner. I can understand how this has happened, but do the NoSQL community really want to dilute their message by including all of these technologies, most of which have been around for quite some time and have had relatively limited traction? In the spirit of what I believe is at the genesis of the current NoSQL movement, I reckon that a NoSQL solution should have the following characteristics:

– Not be based on the relational model

– Have little or no acquisition cost

– Be designed to run on commodity hardware

– Use a distributed architecture

– Support extreme or Web-scale databases

Notice that I don’t include a characteristic based on lack of consistency. I reckon that, over time, consistency will become a characteristic of some NoSQL environments.

By the way, earlier in this blog post I referred to the XML and XQuery capabilities in IBM DB2 and Oracle Database. In case you are curious, there is a significant difference in how DB2 and Oracle Database have incorporated XML capabilities into their respective products. Oracle essentially leverages its existing relational infrastructure to provide several ways to store XML data, while IBM built true native XML storage capabilities into its product. In other words, DB2 is indeed a true “native XML store”. In the past, I used to blog about native XML storage over at www.nativeXMLdatabase.com, before handing the reins over to Matthias Nicola. If you want a little more insight on XML support in Oracle Database, check out XML in Oracle 11g and Why Won’t Oracle Publish XML Benchmark Results for TPoX?
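For readers who have not seen the two styles side by side, here is a minimal Python sketch contrasting relational-style "shredding" of an XML document into flat rows with keeping the parsed hierarchy and querying it by path. The order document is hypothetical, and Python's `xml.etree.ElementTree` merely stands in for a storage engine: neither DB2 pureXML nor Oracle XML DB works this way internally.

```python
import xml.etree.ElementTree as ET

# Hypothetical order document used only for this illustration.
DOC = """<order id="42">
  <customer>Acme</customer>
  <item sku="A1" qty="2"/>
  <item sku="B7" qty="1"/>
</order>"""

def shred(xml_text):
    """Relational-style shredding: flatten the document into rows.

    The mapping (which elements become which columns) is fixed up front;
    anything the mapping does not cover is simply not stored.
    """
    root = ET.fromstring(xml_text)
    order_row = (root.get("id"), root.findtext("customer"))
    item_rows = [(root.get("id"), item.get("sku"), int(item.get("qty")))
                 for item in root.findall("item")]
    return order_row, item_rows

def keep_native(xml_text):
    """Native-style storage: keep the parsed hierarchy and query it by path."""
    return ET.fromstring(xml_text)

order_row, item_rows = shred(DOC)
tree = keep_native(DOC)
print(order_row)  # ('42', 'Acme')
print(item_rows)  # [('42', 'A1', 2), ('42', 'B7', 1)]
print([i.get("sku") for i in tree.findall("./item[@qty='2']")])  # ['A1']
```

The shredded form answers relational queries directly, but reconstructing the original document means joining the rows back together. The native form keeps the document's structure as-is, so path queries run against the hierarchy itself.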